Unlocking Creativity: How ChatGPT Can Transform Your Novel Research Into a Thrilling Journey

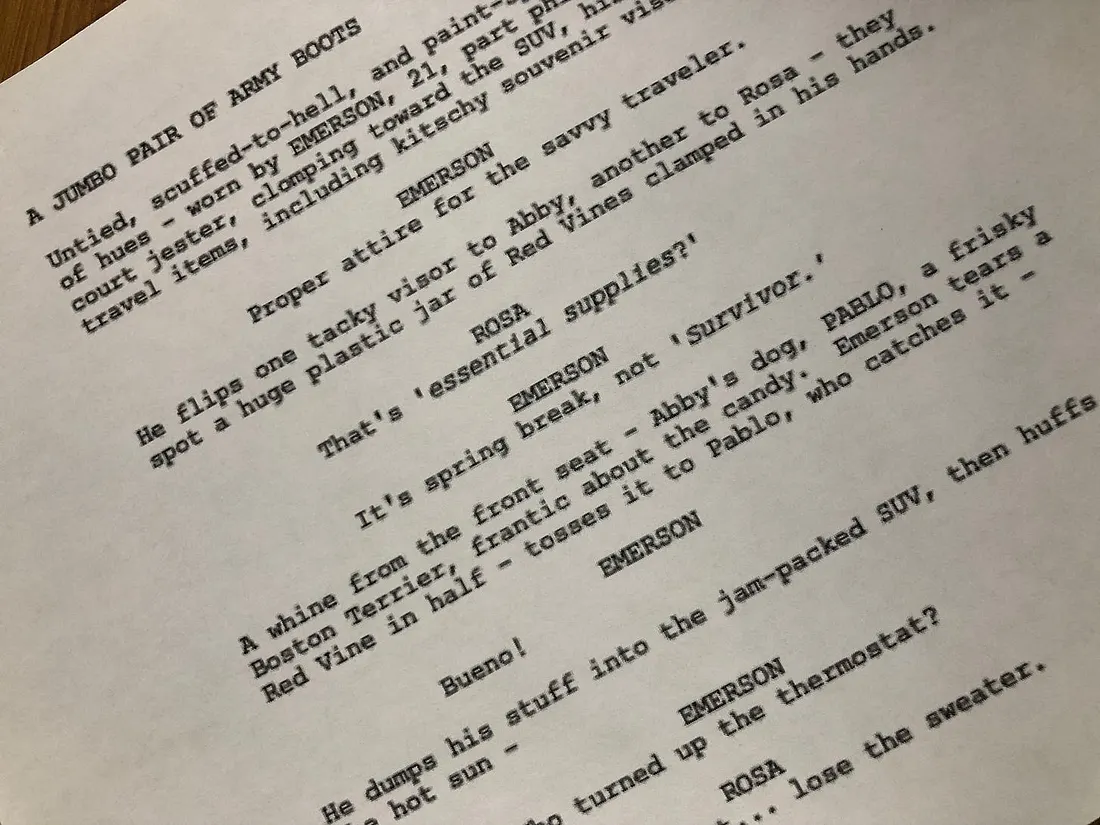

I have no interest in writing my novels using AI. My novels should be mine, not somebody else’s.

But a lot of people use AI as a research tool. As a better version of Google.

What Could Possibly Go Wrong?

You’ve probably heard that ChatGPT and other tools built on “large language models” are prone to hallucination, meaning they tend to make up an answer when they don’t know the real one.

What’s the story here? True or not true?

I recently had a research question that I took to ChatGPT to see how it would do. As some of you know, I write historical novels, and I’ve read the works of many historians over the years. I’ve read so many that it’s sometimes hard to remember exactly who said what.